#AWS EC2

How to download the PEM file from AWS EC2

Learn how to download and secure a PEM file from AWS EC2. Step-by-step guide, best practices, and troubleshooting to prevent lost key issues.

If you are working with AWS EC2 instances, you need a PEM (Privacy-Enhanced Mail) file, the private key of your key pair, to connect securely via SSH. However, AWS lets you download this file only once, when the key pair is created; you cannot download it again later. This guide shows you how to download and secure your PEM file correctly. Table of Contents: Introduction to PEM Files; Importance of PEM Files in AWS EC2; How to Download a PEM File from…
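Since the key can only be downloaded once, the usual next step is to lock down its file permissions: OpenSSH refuses to use a private key that other users can read. The sketch below is a minimal Python illustration, assuming a Linux or macOS machine, a hypothetical key file named `my-key.pem`, and the `ec2-user` login that Amazon Linux AMIs use by default (other AMIs, such as Ubuntu, use a different user name).

```python
import os
import shlex
import stat


def secure_pem(path: str) -> int:
    """Restrict a downloaded PEM key to owner read-only (same as chmod 400).

    Returns the resulting permission bits so the caller can verify them.
    """
    os.chmod(path, stat.S_IRUSR)  # 0o400: owner may read; group/others get nothing
    return stat.S_IMODE(os.stat(path).st_mode)


def ssh_command(pem_path: str, host: str, user: str = "ec2-user") -> str:
    """Build the ssh command line that uses the key to log in to an instance."""
    return shlex.join(["ssh", "-i", pem_path, f"{user}@{host}"])


if __name__ == "__main__":
    # Hypothetical key file and public DNS name, for illustration only.
    print(ssh_command("my-key.pem", "ec2-198-51-100-7.compute-1.amazonaws.com"))
```

The shell equivalent is `chmod 400 my-key.pem` followed by the printed `ssh` line.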

Django Project Deployment on AWS

Step-by-Step Guide to Deploying Django on AWS

Introduction: Deploying a Django project on AWS allows for scalable, reliable, and high-performance web applications. AWS provides various services to host your application, manage databases, and scale your infrastructure. This guide will walk you through the steps of deploying a Django project on AWS using EC2, RDS, S3, and other related services. Overview: The deployment process…

AWS helps Owkin advance precision medicine with generative AI

Owkin, a TechBio company that uses artificial intelligence and human judgment to match each patient with the best course of treatment, announced today that it is collaborating with AWS to develop AI diagnostics, de-risk and expedite clinical trials, and revolutionize drug discovery. Owkin intends to use AWS’s dependable, secure, and expansive cloud platform, along with its proven global infrastructure, to improve data operations, achieve operational excellence, and further precision medicine research.

“Owkin was founded on the belief that technologies that securely and privately extract insights from hospitals and research facilities’ massive amounts of patient data will be key to precision medicine’s future,” says cofounder and CEO Thomas Clozel. Clozel adds that collective intelligence, built through teamwork and cutting-edge technology, is the company’s greatest asset, and that working with AWS lets Owkin expand its research by drawing on the cloud’s strength, security, and adaptability.

By combining machine learning and high-performance computing, Owkin and AWS hope to spur biotech innovation. By integrating AWS’s secure and scalable cloud infrastructure and services with its specialized biotech research and development, Owkin can meet petabyte-scale storage needs, develop generative AI applications, and build and customize foundation models. To improve patient health outcomes, Owkin will use Amazon SageMaker to build, train, and deploy machine learning models, optimize the processing of high-quality data at scale, and advance the deployment of its portfolio of AI solutions through research partners, pathology labs, and biopharma companies.

To solve important precision medicine challenges and determine the best course of action for each patient, Owkin will collaborate with specialists from AWS’s Healthcare and Life Sciences team, who bring practical experience from pharmaceutical, medical device, biotech, and government health and regulatory organizations. Owkin and AWS hope to combine their respective areas of expertise to support groundbreaking discoveries and drive game-changing solutions to global healthcare issues.

“The cloud and the advent of computational biochemistry significantly accelerated the pace of innovation in healthcare and life sciences, and applying generative AI enables another huge leap forward,” said Dan Sheeran, General Manager of Healthcare and Life Sciences at AWS. He added that AWS is thrilled to work with Owkin to provide flexible, secure, and scalable infrastructure, as well as purpose-built generative AI tools, to help the company reduce costs and find and develop better, more targeted treatments more quickly.

Owkin intends to build and deploy its applications on AWS’s high-performance, cost-effective, and scalable infrastructure and cutting-edge networking. This infrastructure includes Amazon Elastic Compute Cloud (Amazon EC2) UltraClusters of P5 instances, which are powered by NVIDIA H100 Tensor Core GPUs. Using second-generation Elastic Fabric Adapter (EFA) technology, these instances support networking speeds of up to 3,200 Gbps.

This allows Owkin to scale up to 20,000 H100 GPUs in EC2 UltraClusters for on-demand access to supercomputer-class performance, and P5 instances can cut machine learning training times from days to hours. Owkin also plans to test AWS chips designed specifically for the cloud, such as AWS Graviton, a general-purpose CPU that offers the best price performance for a variety of workloads, and AWS Trainium, a high-performance machine learning accelerator.

Under the AWS Digital Sovereignty Pledge, Owkin will have complete control over its data through some of the most robust sovereignty controls available today, such as data residency guardrails, specialized hardware and software that prevent outside access during processing on Amazon Elastic Compute Cloud (Amazon EC2), and encryption of data in transit, at rest, or in memory. As a result, Owkin will be able to keep building strong, secure partnerships while producing and working with the best possible data. Owkin will also benefit from AWS’s extensive compliance controls, which support 143 security standards and compliance certifications and help partners meet global regulatory requirements.

About Owkin

Owkin is a TechBio company that fuses artificial and human intelligence to make sure every patient receives the best possible care. AI helps us understand complex biology so that we can find new treatments, de-risk and expedite clinical trials, and create AI diagnostics. Owkin unlocks the potential of artificial intelligence to power precision medicine by using privacy-enhancing federation to access multimodal patient data. We combine cutting-edge AI methods with wet lab experiments to build a powerful feedback loop for accelerated innovation and discovery.

MOSAIC, the largest multi-omics atlas for cancer research worldwide, was founded by Owkin.

Through investments from prestigious biopharma companies (including Sanofi and BMS) and venture funds (including Fidelity, GV, and BPI), Owkin has raised over $300 million.

MOSAIC & Owkin: Building the World’s Largest Spatial Omics Dataset in Oncology

MOSAIC is a groundbreaking initiative, led by Owkin, to create the largest spatial omics dataset in oncology. Here’s some key information:

MOSAIC:

Stands for Multi Omic Spatial Atlas in Cancer.

It’s a large-scale research project led by Owkin with partners like NanoString and research institutions.

Aims to collect and analyze 7,000 tumor samples across seven different cancer types (e.g., lung, breast, glioblastoma).

Uses cutting-edge spatial omics technologies to map cancer cells and their surrounding environment at high resolution.

Owkin:

An AI biotech company specializing in artificial intelligence and data science for oncology research.

Provides foundational expertise in AI, data science, and oncology for the MOSAIC project.

Develops the data platform and applies AI/ML to analyze the MOSAIC data and unlock new insights.

What makes MOSAIC unique?

Scale: 100x larger than existing spatial omics datasets, offering unprecedented depth and granularity.

Multimodal: Combines various data types like gene expression, protein levels, and spatial information.

AI-powered: Leverages Owkin’s AI expertise to extract meaningful insights from the vast dataset.

Potential impact:

Advanced drug discovery: Identify new drug targets and develop more effective therapies.

Personalized medicine: Understand individual tumors better and develop tailored treatment approaches.

Improved diagnosis: Develop non-invasive methods for early cancer detection and more accurate diagnoses.

Read more on Govindhtech.com

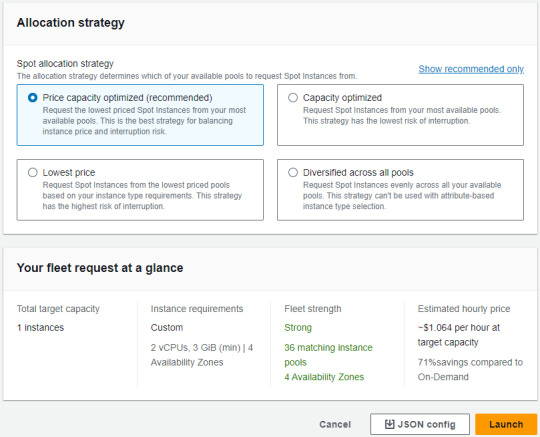

How to set up an AWS EC2 Spot Instance

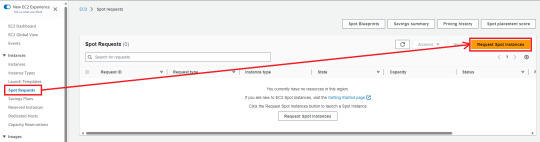

1. Click "Spot Requests" (in Instances) -> "Request Spot Instances"

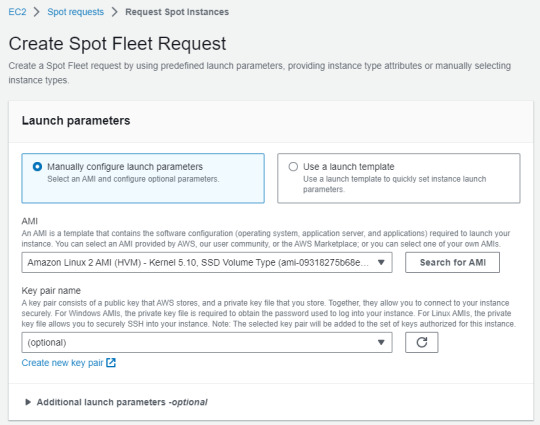

2. Choose an AMI that fits your needs

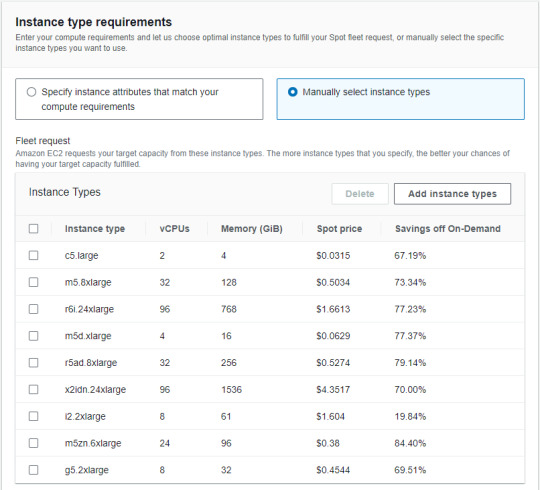

3. Choose "Manually select instance types" (in Instance type requirements)

Tips for getting your request fulfilled

Select multiple instance types that meet the specs you need. Being flexible across instance types keeps the request as affordable as possible and gives it more Spot capacity to draw from. Instance types with large Spot capacity pools (often the widely used ones) are more likely to be fulfilled.

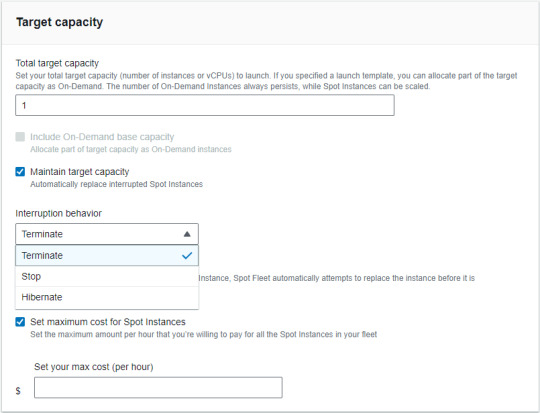

4. Go to "Target capacity" -> set the conditions ("Maintain target capacity" & "Set maximum cost for Spot Instances")

Tips for setting these conditions

Maintain target capacity -> choose the "Interruption behavior" (what automatically happens to your Spot Instances when they are interrupted). Set maximum cost for Spot Instances -> it is recommended that the maximum cost be lower than the On-Demand price.

5. Set "Allocation strategy" -> Click "Launch"
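The console steps above map onto the Spot Fleet request configuration that EC2 accepts programmatically. This Python sketch only builds the dictionary (it calls no AWS API); the role ARN, AMI ID, instance types, and price in the example are placeholders, and the `capacityOptimized` default strategy is our own choice rather than something the steps prescribe.

```python
from __future__ import annotations


def build_spot_fleet_config(
    fleet_role_arn: str,
    image_id: str,
    instance_types: list[str],
    target_capacity: int,
    max_price: str,
    allocation_strategy: str = "capacityOptimized",
    interruption_behavior: str = "terminate",
) -> dict:
    """Assemble a Spot Fleet request config that mirrors the console steps.

    - Several instance types -> one launch specification per type, giving the
      fleet more Spot capacity pools to draw from.
    - "Maintain target capacity" -> Type="maintain", plus the interruption
      behavior applied to interrupted instances.
    - "Set maximum cost for Spot Instances" -> SpotPrice (max price per unit hour).
    """
    if not instance_types:
        raise ValueError("select at least one instance type")
    if target_capacity < 1:
        raise ValueError("target capacity must be at least 1")
    return {
        "IamFleetRole": fleet_role_arn,
        "AllocationStrategy": allocation_strategy,
        "TargetCapacity": target_capacity,
        "SpotPrice": max_price,
        "Type": "maintain",
        "InstanceInterruptionBehavior": interruption_behavior,
        "LaunchSpecifications": [
            {"ImageId": image_id, "InstanceType": itype} for itype in instance_types
        ],
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own role ARN, AMI ID, and price.
    config = build_spot_fleet_config(
        "arn:aws:iam::123456789012:role/my-spot-fleet-role",
        "ami-0123456789abcdef0",
        ["m5.large", "m5a.large", "m6i.large"],
        target_capacity=2,
        max_price="0.05",
    )
    print(config["LaunchSpecifications"])
```

To submit it, you would pass the dictionary to boto3 as `boto3.client("ec2").request_spot_fleet(SpotFleetRequestConfig=config)`; check the EC2 API reference for the full set of optional fields.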

Lambda Lab | Start and Stop EC2 Instances using Lambda Function | Tech A...
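The usual pattern behind this kind of lab is a small handler that calls the EC2 `StartInstances` or `StopInstances` API. A hedged Python sketch: the event shape (`action`, `instance_ids`) is a hypothetical convention, the extra `ec2` parameter exists only so the handler can be tested with a fake client, and the function's execution role would need the `ec2:StartInstances` and `ec2:StopInstances` permissions.

```python
def lambda_handler(event, context, ec2=None):
    """Start or stop the EC2 instances listed in the event.

    Hypothetical event shape:
        {"action": "start" | "stop", "instance_ids": ["i-0123456789abcdef0"]}
    """
    if ec2 is None:
        import boto3  # bundled with the AWS Lambda Python runtime

        ec2 = boto3.client("ec2")
    action = event.get("action")
    instance_ids = list(event.get("instance_ids", []))
    if not instance_ids:
        return {"status": "no-op", "instance_ids": []}
    if action == "start":
        ec2.start_instances(InstanceIds=instance_ids)
    elif action == "stop":
        ec2.stop_instances(InstanceIds=instance_ids)
    else:
        raise ValueError(f"unsupported action: {action!r}")
    return {"status": action, "instance_ids": instance_ids}
```

Lambda invokes it as `lambda_handler(event, context)`; triggering it from two schedules (one sending `"start"`, one `"stop"`) is a common way to run instances only when they are needed.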

64 vCPU/256 GB ram/2 TB SSD EC2 instance with #FreeBSD or Debian Linux as OS 🔥

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
   - What are IAM roles?
   - Why should you use IAM roles instead of hardcoding access keys?
   - How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
   - Launching an EC2 instance and attaching the IAM role.
   - Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
   - Step-by-step commands to upload files to an S3 bucket.
   - Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
   - Retrieving objects stored in your S3 bucket using AWS CLI.
   - How to test and verify successful downloads.

5. Deleting Files in S3:
   - Securely deleting files from an S3 bucket.
   - Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
   - Using least privilege policies in IAM roles.
   - Encrypting files in transit and at rest.
   - Monitoring and logging using AWS CloudTrail and S3 access logs.
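To make the least-privilege point concrete, here is a hedged Python sketch of the two JSON documents such a role typically needs: a trust policy that lets EC2 assume the role, and a permissions policy limited to one bucket. The bucket name is a placeholder. Note that `s3:ListBucket` applies to the bucket ARN, while the object actions apply to `bucket/*`.

```python
import json


def s3_object_policy(bucket: str) -> dict:
    """Least-privilege permissions for upload/download/delete in one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListTheBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",  # bucket-level action
            },
            {
                "Sid": "ReadWriteDeleteObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",  # object-level actions
            },
        ],
    }


# Trust policy: allows the EC2 service to assume the role via an instance profile.
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


if __name__ == "__main__":
    print(json.dumps(s3_object_policy("my-example-bucket"), indent=2))
```

Running `aws s3 ls` with no bucket argument additionally requires `s3:ListAllMyBuckets`, which this policy deliberately omits.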

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

1. Create an S3 Bucket
   - Navigate to the S3 console and create a new bucket with a unique name.
   - Configure bucket permissions for private or public access as needed.

2. Configure IAM Role
   - Create an IAM role with an S3 access policy.
   - Attach the role to your EC2 instance to avoid hardcoding credentials.

3. Launch and Connect to an EC2 Instance
   - Launch an EC2 instance with the IAM role attached.
   - Connect to the instance using SSH.

4. Install AWS CLI and Configure
   - Install AWS CLI on the EC2 instance if not pre-installed.
   - Verify access by running `aws s3 ls` to list available buckets. (Listing all buckets needs the `s3:ListAllMyBuckets` permission; if the role is scoped to one bucket, run `aws s3 ls s3://<bucket-name>` instead.)

5. Perform File Operations
   - Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
   - Download files: Use `aws s3 cp` to download files from S3 to EC2.
   - Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

6. Cleanup
   - Delete test files and terminate resources to avoid unnecessary charges.
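The CLI commands from the file-operations step can be scripted. The following Python sketch builds the `aws s3 cp`, `aws s3 rm`, and `aws s3 ls` invocations and, by default, only returns them as a string (a dry run) so nothing touches your account; the bucket and file names are hypothetical. On an instance with the role attached, the CLI picks up the role's temporary credentials on its own, so no keys appear in the script.

```python
from __future__ import annotations

import shlex
import subprocess


def s3_cli(op: str, bucket: str, key: str = "", local: str = "") -> list[str]:
    """Build AWS CLI argv for the tutorial's upload/download/delete/list steps."""
    if op not in ("upload", "download", "delete", "list"):
        raise ValueError(f"unknown operation: {op!r}")
    uri = f"s3://{bucket}/{key}" if key else f"s3://{bucket}"
    if op == "list":
        return ["aws", "s3", "ls", uri]
    if not key:
        raise ValueError(f"{op!r} needs an object key")
    if op == "delete":
        return ["aws", "s3", "rm", uri]
    if not local:
        raise ValueError(f"{op!r} needs a local file path")
    if op == "upload":
        return ["aws", "s3", "cp", local, uri]  # EC2 -> S3
    return ["aws", "s3", "cp", uri, local]  # S3 -> EC2


def run(argv: list[str], dry_run: bool = True) -> str:
    """Return the command as a string (dry run) or execute it and return stdout."""
    if dry_run:
        return shlex.join(argv)
    # check=True raises CalledProcessError if the CLI exits non-zero.
    return subprocess.run(argv, check=True, capture_output=True, text=True).stdout


if __name__ == "__main__":
    bucket = "my-example-bucket"  # hypothetical bucket name
    for argv in (
        s3_cli("upload", bucket, "logs/app.log", "app.log"),
        s3_cli("download", bucket, "logs/app.log", "app-copy.log"),
        s3_cli("delete", bucket, "logs/app.log"),
    ):
        print(run(argv))
```

Pass `dry_run=False` to `run` once the printed commands look right.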

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

Terraform on AWS - DNS to DB Demo on AWS with EC2 | Infrastructure as Code

Deploying Node.js Apps to AWS

Deploying Node.js Apps to AWS: A Comprehensive Guide

Introduction: Deploying a Node.js application to AWS (Amazon Web Services) helps make your app scalable, reliable, and accessible to users around the globe. AWS offers various services that can be used for deployment, including EC2, Elastic Beanstalk, and Lambda. This guide will walk you through the process of deploying a Node.js application using AWS EC2 and Elastic Beanstalk. Overview: We…

The Importance of Data Snapshots

Understanding Amazon Data Lifecycle Manager

Amazon Data Lifecycle Manager now supports pre- and post-snapshot scripts embedded in AWS Systems Manager (SSM) documents. These scripts help ensure that the Amazon Elastic Block Store (Amazon EBS) snapshots created by Data Lifecycle Manager are application-consistent: they can flush buffered data to EBS volumes, pause and resume I/O operations, and more. AWS is also publishing a series of in-depth blog posts to accompany this launch that walk you through using this functionality with Windows Volume Shadow Copy Service (VSS) and self-managed relational databases.

Overview of Data Lifecycle Manager (DLM)

In short, Data Lifecycle Manager (DLM) automates the creation, retention, and deletion of Amazon EBS volume snapshots. After the prerequisite steps (tagging your SSM documents, setting up an IAM role for DLM, and onboarding your EC2 instance to AWS Systems Manager), you create a lifecycle policy, tag the relevant Amazon Elastic Compute Cloud (Amazon EC2) instances, choose a retention model, and let DLM handle the rest. Each policy specifies what to back up, when to back it up, and how long to keep the snapshots. For a comprehensive explanation of DLM, see the 2018 AWS blog post “New – Lifecycle Management for Amazon EBS Snapshots”.
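As a rough sketch of what such a policy contains, the Python function below assembles the `PolicyDetails` for an instance-targeted DLM policy that takes a daily snapshot, keeps the last seven, and runs a pre/post script document. The field names and the 0 to 3 retry limit reflect our reading of the DLM API and should be checked against the API reference; the tag, document name, and schedule are hypothetical.

```python
def dlm_policy_details(
    tag_key: str,
    tag_value: str,
    ssm_document: str,
    time_utc: str = "03:00",
    keep: int = 7,
    timeout_s: int = 60,
    retries: int = 2,
) -> dict:
    """Build PolicyDetails for a daily, instance-targeted DLM policy with scripts."""
    if not 10 <= timeout_s <= 120:
        raise ValueError("script timeout must be between 10 and 120 seconds")
    if not 0 <= retries <= 3:  # assumed API limit; verify in the DLM reference
        raise ValueError("retry count must be between 0 and 3")
    return {
        "PolicyType": "EBS_SNAPSHOT_MANAGEMENT",
        "ResourceTypes": ["INSTANCE"],  # scripts apply to instance policies
        "TargetTags": [{"Key": tag_key, "Value": tag_value}],
        "Schedules": [
            {
                "Name": "daily-application-consistent",
                "CopyTags": True,
                "CreateRule": {
                    "Interval": 24,
                    "IntervalUnit": "HOURS",
                    "Times": [time_utc],  # start time in UTC, "hh:mm"
                    "Scripts": [
                        {
                            "Stages": ["PRE", "POST"],
                            "ExecutionHandlerService": "AWS_SYSTEMS_MANAGER",
                            "ExecutionHandler": ssm_document,
                            # Take the snapshot even if a script ultimately fails.
                            "ExecuteOperationOnScriptFailure": True,
                            "ExecutionTimeout": timeout_s,
                            "MaximumRetryCount": retries,
                        }
                    ],
                },
                "RetainRule": {"Count": keep},  # count-based retention model
            }
        ],
    }
```

The result would be passed as `PolicyDetails` to `boto3.client("dlm").create_lifecycle_policy(...)`, together with the execution role ARN, a description, and `State="ENABLED"`.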

Application-Consistent Snapshots

EBS snapshots are crash-consistent: they capture the state of the corresponding EBS volume at the moment the snapshot is created. That is adequate for many kinds of applications, but not for those that use snapshots to record the current state of an active relational database. Application consistency requires accounting for pending transactions (waiting for them to complete or causing them to fail), briefly pausing further write operations, taking the snapshot, and then resuming regular operations.

That is the purpose of this launch: DLM can now instruct the instance to prepare for an application-consistent backup. Pre-snapshot scripts can handle pending transactions, stop the application or database, freeze the filesystem, and flush in-memory data to persistent storage. Afterward, the post-snapshot script can thaw the filesystem, restart the application or database, and reload in-memory caches from persistent storage.

In addition to basic support for custom scripts, you can use this functionality to automate the creation of VSS Backup snapshots.

Pre- and Post-Snapshot Scripts

The new scripts apply to DLM instance policies. Suppose you have created a policy that targets a single instance and references SSM documents containing pre- and post-snapshot scripts. When the policy runs on its schedule, the following happens:

1. The pre-snapshot script in the SSM document starts.

2. Every command in the script runs, and the script's success or failure is recorded. If the policy allows it, DLM retries failed scripts.

3. Multi-volume EBS snapshots are started for the EBS volumes attached to the instance; additional options in the policy control this step.

4. The post-snapshot script in the SSM document starts, runs each command, and records the script-level status (success or failure).

Options in the policy let you control what happens (retry, continue, or skip) when one of the scripts fails or times out. The status is recorded and published as Amazon CloudWatch metrics and Amazon EventBridge events, and is also encoded in tags that are automatically applied to every snapshot.
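To make the sequence and the failure options concrete, here is a toy Python model of one scheduled run: pre-snapshot script with retries, snapshot, post-snapshot script. It is not DLM's implementation; the callables and status labels are hypothetical, and "continue" here means taking the snapshot even though the application was not quiesced.

```python
from typing import Callable, Dict

Script = Callable[[], bool]  # a script returns True on success


def run_with_retries(script: Script, max_retries: int) -> bool:
    """Run a script once, then retry up to max_retries more times on failure."""
    for _ in range(1 + max_retries):
        if script():
            return True
    return False


def snapshot_cycle(
    pre: Script,
    take_snapshot: Callable[[], None],
    post: Script,
    max_retries: int = 2,
    continue_on_failure: bool = True,
) -> Dict[str, str]:
    """Toy model of one scheduled run of an instance policy with scripts."""
    status = {"pre": "skipped", "snapshot": "skipped", "post": "skipped"}
    pre_ok = run_with_retries(pre, max_retries)
    status["pre"] = "success" if pre_ok else "failed"
    if not pre_ok and not continue_on_failure:
        return status  # "skip": no snapshot this cycle
    take_snapshot()
    # Without a successful pre-script, the application was not quiesced.
    status["snapshot"] = "application-consistent" if pre_ok else "crash-consistent"
    status["post"] = "success" if run_with_retries(post, max_retries) else "failed"
    return status
```

In DLM itself, these per-script outcomes are what end up in the CloudWatch metrics, EventBridge events, and snapshot tags described above.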

The pre-snapshot and post-snapshot scripts can run shell scripts, PowerShell scripts, and any other operations permitted in an SSM command document. The actions must complete within the policy's timeout, which can be set anywhere from 10 to 120 seconds.

Getting Started

To create a robust pair of scripts, you need a thorough understanding of your application or database. Your scripts should account not only for the “happy path” of a successful run but also for multiple failure scenarios. For example, a pre-snapshot script should fork a background task that serves as a failsafe in case the post-snapshot script fails.
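One way to read the failsafe advice is as a watchdog: after freezing, arm a timer that thaws automatically if the post-snapshot step never arrives. The Python sketch below is an in-process analogue built on `threading.Timer`; a real pre-snapshot script would fork a separate background process, because the pre- and post-snapshot scripts run as separate commands. `freeze` and `thaw` are hypothetical callables (for example, wrappers around `fsfreeze -f` and `fsfreeze -u`).

```python
import threading
from typing import Callable, Optional


class FreezeGuard:
    """Freeze now, and guarantee a thaw later even if the post step never runs."""

    def __init__(self, freeze: Callable[[], None], thaw: Callable[[], None], timeout_s: float):
        self._freeze = freeze
        self._thaw = thaw
        self._timeout_s = timeout_s
        self._lock = threading.Lock()
        self._thawed = True  # nothing is frozen yet
        self._timer: Optional[threading.Timer] = None

    def _thaw_once(self) -> bool:
        """Thaw exactly once, whether called by the timer or by release()."""
        with self._lock:
            if self._thawed:
                return False
            self._thawed = True
        self._thaw()
        return True

    def freeze(self) -> None:
        """Pre-snapshot step: freeze, then arm the failsafe timer."""
        self._freeze()
        with self._lock:
            self._thawed = False
        self._timer = threading.Timer(self._timeout_s, self._thaw_once)
        self._timer.daemon = True  # don't keep the process alive just for the timer
        self._timer.start()

    def release(self) -> bool:
        """Post-snapshot step: cancel the timer and thaw; False if already thawed."""
        if self._timer is not None:
            self._timer.cancel()
        return self._thaw_once()
```

The lock ensures that the timer and the post-snapshot path can never both thaw, and that whichever arrives second becomes a no-op.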

Read more on Govindhtech.com

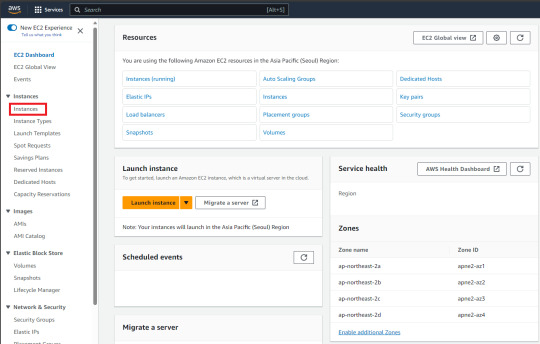

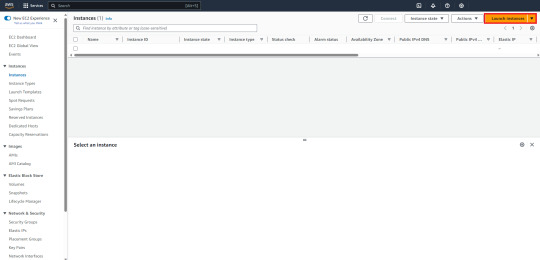

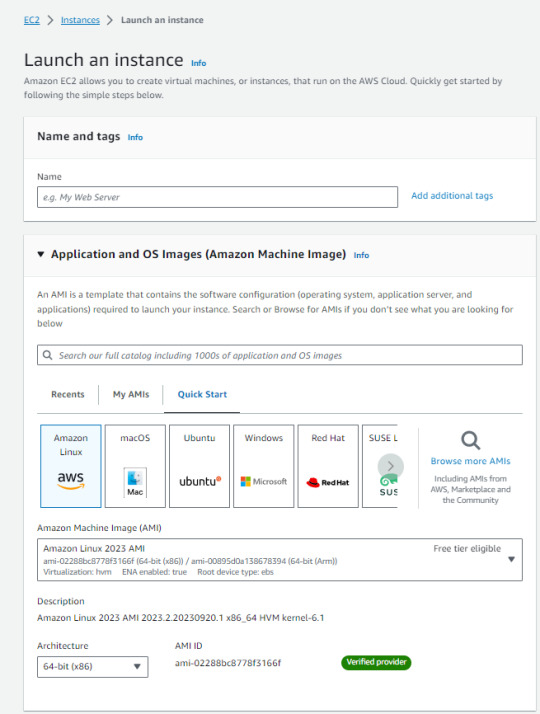

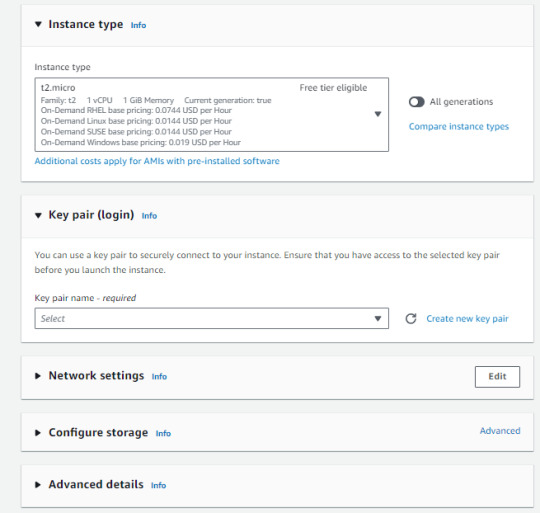

How to set up AWS EC2

1. Open the EC2 console home page -> Click "Instances"

2. Click "Launch Instances"

3. Configure the instance settings -> Click "Launch Instance"

This is a very important point!

You should choose the "Instance type" based on your needs; it's helpful to look at its "vCPU" and "Memory" values. You also need to choose a "Key pair". If you don't have one, click "Create new key pair" and download the private key file when prompted, because AWS does not let you download it again later. You'll be using it to access your instances, so keep it handy.
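Matching your requirements to an instance type is a simple filter. This Python sketch picks the smallest entry that meets a minimum vCPU and memory requirement from a small, hand-copied sample of T3 types; the sample is illustrative only, and current specs (and Region-specific pricing) should come from the console or the `DescribeInstanceTypes` API.

```python
from typing import List, NamedTuple, Optional


class InstanceType(NamedTuple):
    name: str
    vcpus: int
    memory_gib: float


# Illustrative sample of general-purpose burstable types, not the full catalog.
CATALOG: List[InstanceType] = [
    InstanceType("t3.micro", 2, 1),
    InstanceType("t3.small", 2, 2),
    InstanceType("t3.medium", 2, 4),
    InstanceType("t3.large", 2, 8),
    InstanceType("t3.xlarge", 4, 16),
    InstanceType("t3.2xlarge", 8, 32),
]


def smallest_fit(min_vcpus: int, min_memory_gib: float) -> Optional[InstanceType]:
    """Return the smallest sampled type meeting both requirements, or None."""
    candidates = [
        t for t in CATALOG if t.vcpus >= min_vcpus and t.memory_gib >= min_memory_gib
    ]
    # Order by vCPUs first, then memory, so the cheapest-looking fit wins.
    return min(candidates, key=lambda t: (t.vcpus, t.memory_gib), default=None)
```

For example, `smallest_fit(2, 3)` returns the `t3.medium` entry (2 vCPUs, 4 GiB).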

Hello everyone, guess who has two thumbs and was fired from work today

( Meeeee :3 )

I'm drunk and also the best programmer in the world but what can you do

The two IT directors who hired me three years ago quit and both tried to poach me after they left

(One successfully lol so at least I've got another bun in the oven for now)

And then the new IT director started and everything changed, morale has never been lower, and I was ready to go but still needed the money.

But I was def blindsided by today

I just spent all weekend migrating our MarCom EC2 servers from AL2 to AL2023 along with their respective Wordpress sites, SSLs, and databases.

I was very proud and no one acknowledged it :I So I guess I'm happy to be out of there.

But still. Sad day to be veryattractive.

( they cut off my credentials as I walked out the door, but I still have the api keys to their master database, I should delete all the students debts )

( they have back ups of all the databases anyway that i don't have access to anymore, it would be a lost cause )

Comprehensive AWS Server Guide with Programming Examples | Learn Cloud Computing

Explore AWS servers with this detailed guide. Learn about EC2, Lambda, ECS, and more. Includes step-by-step programming examples and expert tips for developers and IT professionals.

Amazon Web Services (AWS) is a 🌐 robust cloud platform offering a plethora of services, ranging from 💻 computing power and 📦 storage to 🧠 machine learning and 🌐 IoT. In this guide, we will explore AWS servers comprehensively, covering key concepts, deployment strategies, and practical programming examples. This article is designed for 👩‍💻 developers, 🛠️ system administrators, and…
